LLMs have moved from research labs into production environments at an unprecedented pace. They’re powering SaaS platforms, automating financial operations, transforming healthcare, and even supporting critical national security workloads. But as adoption accelerates, so do attacks on the infrastructure behind them.

Here’s the problem: most “AI security” conversations are stuck at the application layer. We talk about prompt injection, data poisoning, model theft, and API security. Those are important issues, but they’re not the only place where risk lies.

The reality is that LLMs don’t run in isolation. They rely on a complex stack of servers, accelerators, firmware, and cloud infrastructure. If attackers compromise any layer below the model, such as the BIOS firmware, everything built on top of it is exposed.

Right now, this is the blind spot most organizations haven’t addressed.

The Expanding Attack Surface of LLM Infrastructure

The infrastructure running today’s LLMs isn’t simple. Models are deployed across clusters of GPUs, TPUs, and high-density servers, often spanning multiple data centers and cloud regions. This distributed architecture improves performance, but it also introduces new vulnerabilities.

Adversaries are adapting quickly. Over the past 18 months, we’ve seen a rise in threats aimed at the firmware layer, targeting the foundation beneath the OS. These attacks don’t look like the ones most security teams are used to defending against.

Unlike API exploits or web app vulnerabilities, firmware-level compromises are persistent, stealthy, and hard to detect. Once an attacker implants malicious code in BIOS/UEFI firmware, it survives OS reinstalls, disk wipes, and even full reimaging. That means an attacker can control the system from power-on, before the OS launches and before your endpoint detection tools load.

We’re also seeing attacks against GPU and accelerator firmware. Because LLM workloads are so GPU-intensive, adversaries know this is where sensitive model weights and inference pipelines live. A modified GPU driver or firmware component could silently intercept or manipulate LLM outputs, exfiltrate proprietary models, or even inject subtle data poisoning into AI systems at scale.

This isn’t hypothetical. Security researchers have already documented UEFI rootkits, GPU firmware exploits, and BMC abuse cases targeting enterprise and cloud environments. These are the very same environments that now host the majority of production AI workloads.

The takeaway is simple: if you’re only securing the application layer, you’re already behind.

Why Firmware Matters in AI Security

Security engineers often assume the OS is the root of trust, but in modern AI infrastructure, that’s no longer true. The real root of trust begins below the OS, in firmware and hardware.

Firmware controls the boot process, manages hardware resources, and establishes the environment in which everything else, from the OS to the LLM itself, runs. If it’s compromised, every other security control above it becomes irrelevant.
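One way to see why the trust chain starts below the OS is TPM-based measured boot: each boot stage hashes the next component before handing off control and “extends” that digest into a Platform Configuration Register (PCR), so the new PCR value is SHA-256(old PCR || digest). The minimal sketch below illustrates only that arithmetic, not a TPM API. It assumes a SHA-256 PCR bank that starts at all zeros, and the component byte strings are hypothetical stand-ins for real images. Because every value depends on all earlier measurements in order, tampering with the earliest stage changes the final result, which is what lets a verifier notice it.

```python
import hashlib

PCR_SIZE = 32  # SHA-256 digest length in bytes


def extend(pcr: bytes, measurement: bytes) -> bytes:
    """TPM 2.0-style extend for a SHA-256 bank.

    The measuring code hashes the component, then the PCR is replaced by
    SHA-256(old PCR || component digest). A PCR can only be extended, not set.
    """
    digest = hashlib.sha256(measurement).digest()
    return hashlib.sha256(pcr + digest).digest()


def measure_boot_chain(components: list[bytes]) -> bytes:
    """Extend each boot component, in order, into a PCR that starts at zero."""
    pcr = bytes(PCR_SIZE)
    for component in components:
        pcr = extend(pcr, component)
    return pcr


# Hypothetical stand-ins for firmware, bootloader, and kernel images.
clean = [b"uefi-firmware-v1", b"bootloader", b"kernel"]
tampered = [b"uefi-firmware-v1+implant", b"bootloader", b"kernel"]

good = measure_boot_chain(clean)
bad = measure_boot_chain(tampered)
print(good.hex())
print(good == bad)  # changing the first stage changes the final PCR
```

The same property cuts both ways: measurements are only trustworthy if the code taking the first measurement is itself trusted, which is why a compromised firmware stage undermines everything recorded after it.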

Attackers know this, which is why BIOS/UEFI firmware has become such an attractive target:

- Persistence: Firmware implants survive reboots and reinstalls, giving adversaries long-term access.

- Privilege: A compromised BIOS operates with higher privileges than the OS itself.

- Blind spots: Most EDR/XDR platforms, SIEMs, and vulnerability scanners don’t validate firmware integrity, so attacks often go unnoticed.

In the context of LLMs, this is particularly dangerous. If an attacker implants code at the firmware level, they can:

- Intercept model inference requests

- Exfiltrate proprietary weights and fine-tuning data

- Manipulate responses before they leave the server

- Introduce poisoned training data at scale

In other words: own the firmware, and you own the model.

The Hidden Weak Points in AI Infrastructure

When organizations think about AI security, they usually focus on access control, API gateways, and model-level protections. But LLM infrastructure has hidden weak points most security teams aren’t monitoring:

- BIOS / UEFI vulnerabilities → Persistent rootkits at the foundation of system trust.

- BMC / IPMI takeover → Abusing remote management controllers to bypass OS-level security.

- Cloud hypervisor attacks → Compromising multi-tenant AI hosting environments to access co-located workloads.

- Supply chain implants → Malicious firmware shipped with preconfigured accelerators, motherboards, or servers.

The common thread? These layers operate below the visibility of traditional security tools. Without explicit firmware integrity checks, it’s nearly impossible to know whether the hardware running your LLMs is trustworthy.

Why This Problem Gets Harder in the Cloud

LLMs are increasingly deployed in cloud and hybrid environments where infrastructure is shared across multiple tenants. That adds an extra layer of risk.

In multi-tenant AI hosting environments, attackers don’t even need to compromise your own servers; they can go after the underlying hypervisor, GPUs, or management interfaces that serve multiple customers at once. A single exploit in shared GPU firmware or drivers, for example, could allow lateral movement across entire clusters.

Supply chain risk compounds the challenge. Many enterprises don’t own or directly manage the hardware running their models; they rely on third-party providers. If firmware is already compromised before deployment, you’ll inherit that vulnerability without knowing it, unless you’re proactively verifying hardware trust.

Securing the Root of Trust with FirmGuard

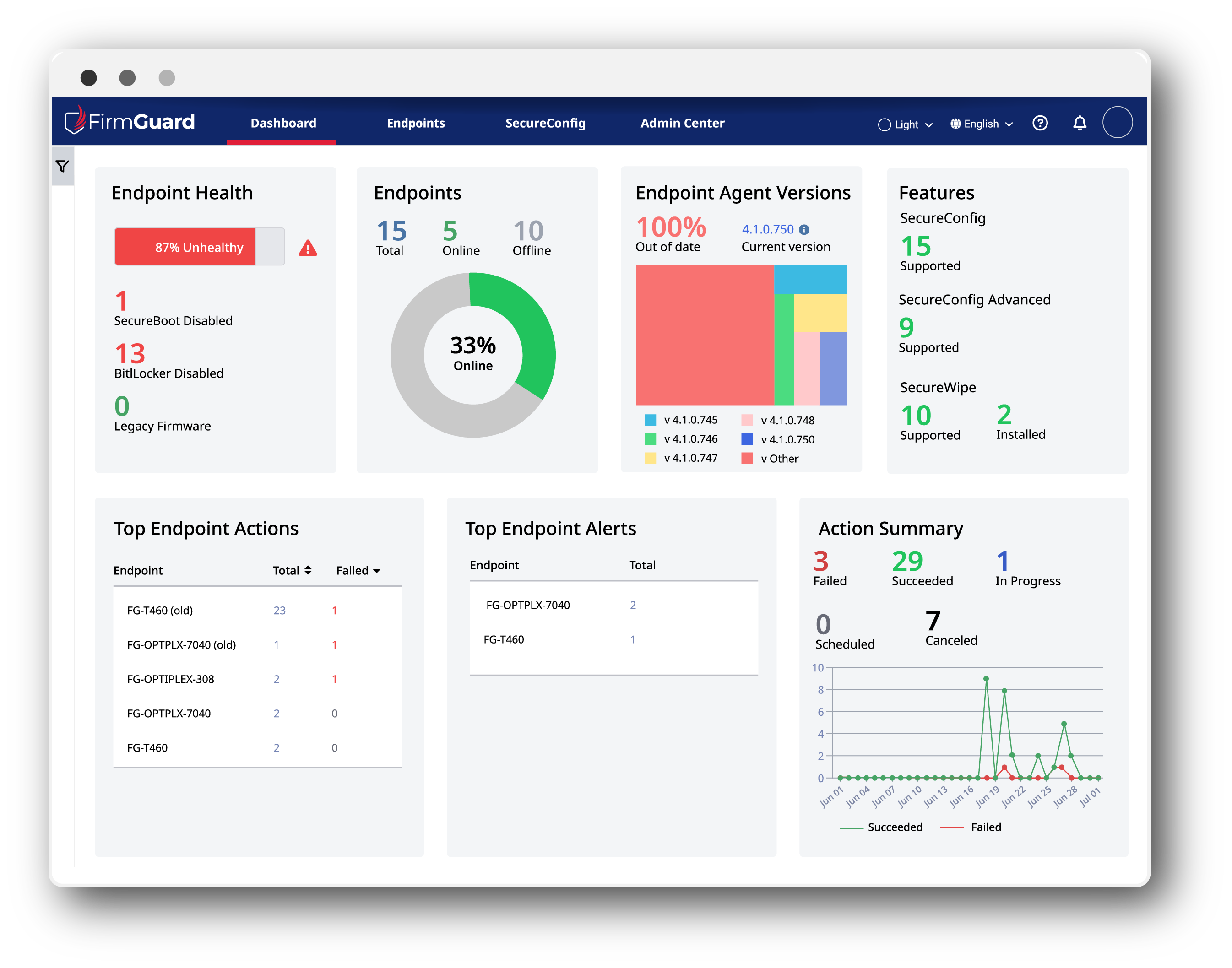

This is where FirmGuard focuses: securing the infrastructure layer that everything else depends on.

FirmGuard continuously monitors BIOS firmware integrity, so if an attacker implants code or modifies firmware, we detect it in real time. That capability matters because firmware compromises don’t just put a single server at risk; they can cascade. If one endpoint in an LLM cluster is compromised, attackers can move laterally and potentially manipulate or exfiltrate data from the entire model-serving infrastructure.

With FirmGuard, security teams can:

- Manage BIOS remotely to verify firmware integrity at scale

- Initiate policy-based remote data wipes that are cryptographically verifiable and fully auditable

- Reimage compromised endpoints to a known-good state without touching the machine

Securing AI infrastructure without securing firmware is like locking the front door while leaving the basement wide open. FirmGuard closes that gap.

Practical Steps Security Engineers Should Take Today

Even with the right tooling, firmware security requires a shift in mindset. Here’s where to start:

- Establish known-good baselines for BIOS/UEFI firmware across all LLM-serving infrastructure.

- Enable UEFI Secure Boot and hardware-based roots of trust (such as a TPM) to prevent unverified code from loading during system startup.

- Integrate remote attestation into your SOC workflows, especially for distributed and cloud-hosted workloads.

- Incorporate firmware compromise scenarios into your incident response plans.
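The first step, establishing and checking known-good baselines, can be sketched as a hash comparison across a fleet. This is a minimal illustration, not FirmGuard’s method. It assumes you can already obtain a raw firmware image per host (how you dump it is out of scope), and the host names, image bytes, and per-host baseline layout are hypothetical; a real fleet would more likely key baselines by platform model and firmware version.

```python
import hashlib


def fingerprint(image: bytes) -> str:
    """SHA-256 hex digest of a raw firmware image."""
    return hashlib.sha256(image).hexdigest()


def check_against_baseline(
    baseline: dict[str, str], observed: dict[str, bytes]
) -> dict[str, str]:
    """Compare observed firmware images with recorded known-good hashes.

    Status per host:
      'ok'          - hash matches the baseline
      'mismatch'    - hash differs; investigate as a possible compromise
      'no-baseline' - host reported an image but has no baseline yet
      'missing'     - host has a baseline but reported no image
    """
    results: dict[str, str] = {}
    for host, image in observed.items():
        expected = baseline.get(host)
        if expected is None:
            results[host] = "no-baseline"
        elif fingerprint(image) == expected:
            results[host] = "ok"
        else:
            results[host] = "mismatch"
    for host in baseline.keys() - observed.keys():
        results[host] = "missing"
    return results


# Hypothetical fleet: byte strings stand in for real firmware dumps.
golden = b"vendor-uefi-image-1.2.3"
baseline = {
    "gpu-node-01": fingerprint(golden),
    "gpu-node-02": fingerprint(golden),
    "gpu-node-04": fingerprint(golden),
}
observed = {
    "gpu-node-01": golden,
    "gpu-node-02": golden + b"\x90implant",
    "gpu-node-03": golden,
}

report = check_against_baseline(baseline, observed)
for host in sorted(report):
    print(host, report[host])
```

Here gpu-node-02 is flagged as a mismatch, gpu-node-03 has no baseline, and gpu-node-04 never reported. One caveat on the design: an image dumped by software running on the same host can be falsified by the very implant you are looking for, which is why hardware-based roots of trust and remote attestation belong alongside simple hash baselines.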

These aren’t nice-to-haves anymore. As attackers continue shifting down the stack, ignoring firmware security isn’t an option.

In AI Security, the Foundation Matters

AI and LLMs are changing how we work, but they’re also changing how adversaries attack. Threat actors are moving beneath the application layer, targeting BIOS/UEFI firmware and management controllers because that’s where traditional defenses are weakest.

If you don’t control the firmware, you don’t control the infrastructure. And if you don’t control the infrastructure, you can’t secure your models.

Securing LLMs isn’t just about protecting prompts, weights, or APIs. It’s about protecting the foundation those models depend on. At FirmGuard, we help organizations defend that foundation, giving security teams visibility into the hardware and firmware layers so they can deploy AI with confidence. Book your FirmGuard demo today.